Express yourself

Faculty brings research to life with assistance from the Technology Transfer team

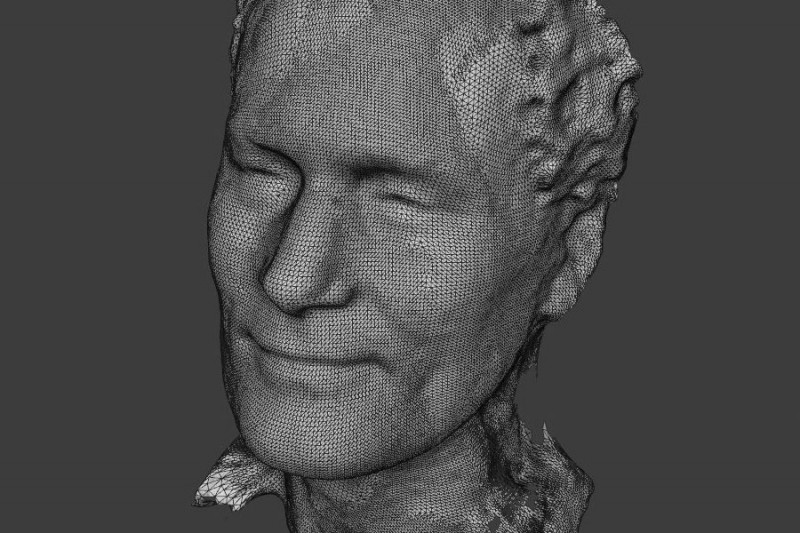

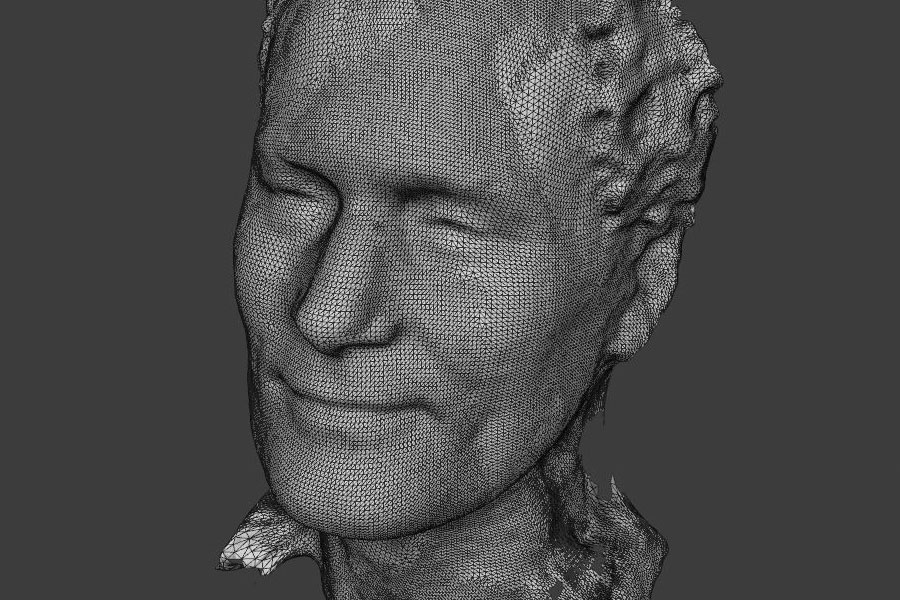

From bestselling video games like The Last of Us to blockbuster movies like Marvel’s Avengers series, 3D models enhance audience immersion. Computer-generated elements are manipulated to illustrate emotion in increasingly realistic ways.

While the use of 3D models in media is commonplace today, and their quality improves drastically year after year, it wasn’t long ago that such technology seemed like something out of science fiction.

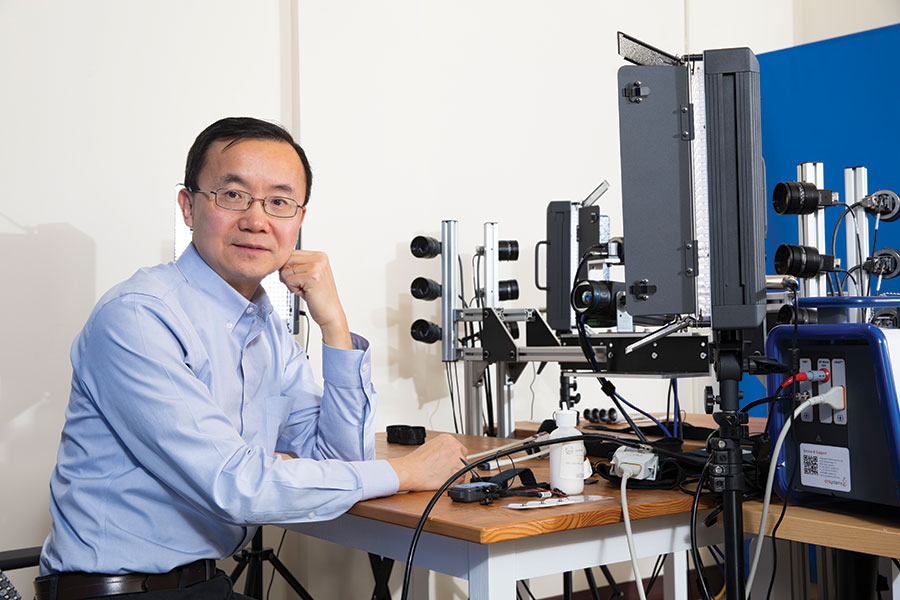

Binghamton University computer science professor Lijun Yin has been — and still is — a pioneer in the ever-evolving field of 3D modeling, conducting research with real-world applications beyond the sphere of entertainment.

Yin began working on 3D facial models in the early 2000s. At the time, other researchers studied human facial expressions using static 2D images or video sequences. However, subtle facial expressions are not easily discernible through a limited 2D perspective, a problem Yin saw as an opportunity.

“I thought, ‘Why can’t we use 3D models to research human emotions?’ Actual conversations happen in 3D space,” Yin says.

Worldwide interest

With assistance from a grant, Yin and fellow researchers developed a 3D facial expression database called BU-3DFE. Using single-shot cameras, Yin and company created 2,500 facial-expression models from 100 subjects, an ethnically diverse group of participants made up of Binghamton University students and faculty.

Yin disclosed his research to Binghamton’s Office of Entrepreneurship and Innovation Partnerships, whose Technology Transfer team manages the intellectual property created in University labs. As interest in the database skyrocketed, the team established a program to make it available to scientists and engineers around the globe.

This groundbreaking research in the field of facial models and expressions has proven popular, having been cited over 1,000 times. The overwhelming interest showed Yin and his team the vast array of applications where the database could be utilized.

“We saw that we would be able to help applications in various fields such as security, machine vision, biometrics and counseling,” Yin says. “We realized that our data held huge value; our database became the benchmark.”

In 2008, Yin prepared to release a second database that leveraged advances in technology since his initial research in the early 2000s. The new version captured facial-expression models using video footage recorded at 25 frames per second. This set was eagerly anticipated by the research community and had huge potential for use by industry.

Scott Hancock, Binghamton University’s director of intellectual property (IP) management and licensing, decided, along with his team, that there was an opportunity to disseminate the benefits of this research by offering development licenses to the database.

“We started licensing in the academic realm. Then more and more companies took licenses, including some major players across multiple industries,” Hancock says. “The databases became an invaluable tool to train algorithms and measure their performance.”

Finding real emotions

With a rising number of licenses granted for both databases, Yin looked toward a third iteration that would expand functionality.

For both previous databases, participants were asked to perform emotions such as fear, sadness or happiness, meaning that all of the emotions were performative rather than genuine. To make the data more useful to fellow researchers, Yin partnered with Binghamton University psychology professor Peter Gerhardstein and artist-in-residence Andy Horowitz (an actor and founder of the acrobatic dance team Galumpha) to help elicit spontaneous facial expressions for his third dataset, BP4D.

Using a proprietary technique, Yin and colleagues collected a dataset of authentic facial expressions. This dataset, nearly 2.6 terabytes (TB) in size, then went to the University of Pittsburgh for further processing.

A fourth database, BP4D+, is a collaboration with Rensselaer Polytechnic Institute. It offers a multimodal extension of the BP4D database and contains synchronized 3D, 2D, thermal and physiological data sequences (such as heart rate, blood pressure, skin conductance and respiration rate). Yin currently is working on a yet-to-be-released database that will address social contexts.

While each of Yin’s databases has improved on the previous one, all of them remain popular. According to Scott Moser, a technology licensing associate on Binghamton University’s Technology Transfer team, “3D modeling is really picking up traction, showing how advanced Professor Yin’s initial research really was for its time.”

Yin has promoted his databases mostly through self-marketing and publications, which have helped build their popularity within the academic sphere.

Researchers use Yin’s databases in a variety of interesting ways. In safety studies, for example, the data helps researchers recognize facial expressions that can signal instances of unsafe or agitated driving.

In studies of human health, the databases can be paired with traditional biomarkers for more accurate diagnoses and treatments. For instance, at a doctor’s office, patients point at a chart and select a level of pain between 1 and 10, which makes it possible for them to either exaggerate or downplay their conditions. With facial-recognition technology, however, a patient’s true level of discomfort potentially could be calculated.

Yin imagines the possibilities of his facial-recognition technology, and he has ambitions to turn science fiction into reality: “Maybe someday in the future, we could conduct an interview without sitting face to face. In virtual space, we could make eye contact. Maybe we could even shake hands virtually.”